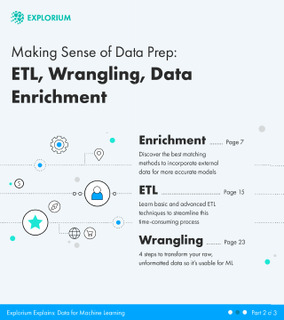

Data prep is an essential part of any ML lifecycle, yet it’s easy to think that sorting data into a database and running a few Python functions will do the trick. That may be true if you’re working with a small dataset or if your models are simply an academic exercise, but what if you’re dealing with production-ready models or datasets with hundreds of columns and thousands of rows? Before you can get great insights from your models, you need to make sure your data is ready to deliver the goods. In part two of our series, Explorium Explains: Data for Machine Learning, we’ll cover basic and advanced ETL techniques to streamline this time-consuming process, four steps for transforming raw, unformatted data so it’s usable for ML, and the best matching methods for incorporating external data to build more accurate models.